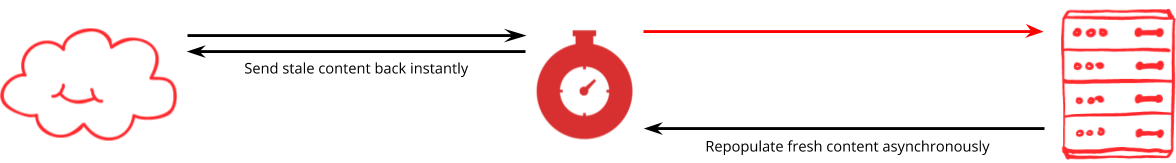

Serving stale

When your servers are down, or take a while to generate pages, end users should still be able to receive cached content, even if it's slightly stale.

Stale content is content stored by Fastly that has exceeded its freshness lifetime. Fastly will sometimes serve content even though it is stale, such as during a stale-while-revalidate window, or if an origin is sick and stale-if-error is set. For more background on stale content, see staleness and revalidation.

You can use VCL to modify the standard stale-serving rules and improve outcomes in three scenarios:

- Where an origin is erroring (returning undesirable content such as a 500 Internal Server Error) and stale content exists, serve the stale content instead of the error.
- Where an origin is down (unreachable, but not yet marked as sick) and stale content exists, serve the stale content instead of a Fastly-generated error page.
- Where an origin is erroring or down and no stale content exists, serve a custom branded error page rather than an unfiltered error from origin or a Fastly-generated error page.

These improved outcomes can be seen in the matrix below:

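| Origin state / Content state | Fresh | SWR | SIE | None |
|---|---|---|---|---|
| Healthy | 😀 | 😀 | 😴 | 😴 |
| Erroring | 😀 | 😀 | 😴 | 😐 |
| Down | 😀 | 😀 | 😴 | 😐 |
| Sick | 😀 | 😀 | 😀 | 😐 |

Content state: Fresh (within its freshness lifetime), SWR (stale, within its stale-while-revalidate window), SIE (stale, past SWR but within its stale-if-error window), None (no stale content exists).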

Either the user will see the content they want served from the edge (😀), they will get the content but only after a blocking fetch to origin (😴), or they will see a custom branded error page (😐). The SIE and None cells of the Erroring and Down rows, and the None cell of the Sick row, are scenarios where the user would previously have seen an unfiltered error (😡). That error could have been generated by Fastly or could be whatever your origin server returned. Thanks to this solution, the user will instead get the improved outcome.

Let's see how this works.

Instructions

Deal with origins that are erroring

The vcl_fetch subroutine runs when a response starts to be received from an origin server. Use this subroutine to detect responses from origin that are undesirable:

```vcl
# Response from origin is an error
if (beresp.status >= 500 && beresp.status < 600) {

  # There's a stale version available! Serve it.
  if (stale.exists) {
    return(deliver_stale);
  }

  # Cache the error for 1s to allow it to be used for any collapsed requests
  set beresp.cacheable = true;
  set beresp.ttl = 1s;
  return(deliver);
}
```

Fastly will, by default, accept and serve any response that is syntactically valid HTTP, which includes error responses. We'll even do this in preference to serving a stale object that we have in cache. So when an origin server returns an undesirable response like a 500 Internal Server Error, and a good version of the content still exists in cache (stale.exists), a simple improvement that can be made is to deliver that stale version (return(deliver_stale)) instead of the bad content.

Responses like 500 Internal Server Error are valid HTTP messages. Although the origin is returning "errors", it is still formatting them correctly and sending them in a timely fashion, so they do not trigger an error-handling flow within Fastly. By contrast, when the origin cannot be reached, Fastly moves directly to vcl_error. This is the difference between an origin that is erroring and an origin that is down. We'll look at origins that are down in the next section.

If the origin serves an undesirable response and a stale version does not exist, the response needs to be replaced with a custom error message so that it isn't served to the end user. Before that happens, mark the response as cacheable and allow it to be cached for 1 second. This ensures that any requests queued behind it due to request collapsing are handled as well.

HINT: When multiple requests are received at the same time for the same URL, we send only one of those requests to your origin server and we hold the rest in a queue using a process called request collapsing. In many cases, this vastly reduces origin load, but it depends on the response from the origin being reusable for all the waiting requests. To be reusable, the response must be cacheable (even if for only 1s). If it's not reusable, it can also be used to create a marker called a hit-for-pass to tell Fastly not to queue up future requests for the same resource.

Typically, errors are not cacheable and don't create hit-for-pass markers, so an origin that starts erroring might be asked to handle each incoming request sequentially, one at a time, causing a huge backlog and ultimately timeouts for the end user.

Fortunately, you can make an error response cacheable by setting beresp.cacheable to true.

To allow the cacheable error to match all the requests in the queue, use return(deliver). You will be able to intercept it later and replace it with something else.

Deal with origins that are down

You just learned that an undesirable response from origin, like a 500 Internal Server Error, is not an error to Fastly, because it's a valid HTTP message. But it's also possible that the origin is unable to serve a valid HTTP response: perhaps it's unreachable, refusing connections, cannot negotiate an acceptable TLS session, timing out (see bereq.first_byte_timeout), or responding with garbled data that cannot be interpreted as HTTP. These origins are considered to be down.

In these cases, Fastly will not execute vcl_fetch and will move directly to vcl_error with obj.status set to 503.

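```vcl
# Any 5XX error in vcl_error (for example, because the origin is down)
if (obj.status >= 500 && obj.status < 600) {
  # vcl_fetch didn't run, so check for a stale copy here
  if (stale.exists) {
    return(deliver_stale);
  }
  # No stale copy: deliver the error so it can be replaced in vcl_deliver
  return(deliver);
}
```

There are lots of possible reasons for ending up in vcl_error, including manually triggered errors that may have a custom status to trigger a specific synthetic response, so the first step is to isolate requests that are in vcl_error with a status code in the 5XX range.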

If the error was triggered by Fastly automatically, then vcl_fetch has not run and the stale.exists check hasn't happened, so it needs to happen here instead. If stale content exists, return(deliver_stale) to serve it.

Where there is no stale content available, this is another case where you will want to replace the error with something customized. Calling return(deliver) here passes the Fastly-generated error on towards delivery. As with the origin errors dealt with in the previous section, you'll then be able to intercept it before it leaves Fastly.
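A timeout also counts as the origin being down. This means the first-byte timeout controls how long a user waits before the stale.exists check in vcl_error gets a chance to run. The following is a hedged sketch. It assumes you want to fail over to stale content sooner than your backend's configured timeout would allow, and the 5s value is purely illustrative. Under those assumptions, you could shorten the timeout per request in vcl_miss:

```vcl
# In vcl_miss (illustrative value, not a recommendation):
# if no first byte arrives from the origin within 5s, Fastly treats the
# origin as down and moves to vcl_error, where stale content can be served.
# Too short a value will also cut off pages that are slow but healthy.
set bereq.first_byte_timeout = 5s;
```

A shorter timeout trades a slow but successful origin response for a faster stale one. It is worth knowing your origin's normal response times before choosing a value.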

Consider effects of shielding

Before customizing the error that leaves Fastly, consider the effects of shielding. If you have shielding enabled on your service, the request may pass through more than one Fastly POP before it attempts to connect to origin. This has a few effects on serving stale content that are worth considering, good and bad:

- Bad - Inadvertent caching of stale content: If an object has been purged using soft purge, or your service configuration has manipulated the object's cache TTL, then it's possible that the shield POP will serve it, and when it gets to the edge POP, it will get re-cached as if it is fresh for the remainder of its max-age. This can be prevented using VCL. See staleness and revalidation for details.
- Good - Allowing request collapsing in more scenarios: When an origin server is down, the resulting VCL flow does not support servicing of queued requests, leading to each queued request potentially being tried against the origin sequentially. Shielding means that even if the shield POP doesn't have a stale object available, it can at least provide a valid HTTP response to the edge POP, allowing the edge to handle the response as if it is from an erroring origin, which does allow queued requests to be serviced.

This request collapsing benefit prevents 'serialization' of the request queue (i.e., sending requests to origin one-at-a-time). Earlier, you learned that when origins are erroring, caching the error response briefly will allow it to match all requests that have queued up due to request collapsing. Unfortunately, if the origin is down, the Fastly-generated synthetic response cannot be made to satisfy queued requests. In this situation, requests might be sent to origin sequentially, one at a time, because each request triggers an error, and can't satisfy the requests in the queue. The queue is cancelled, but then one of the queued requests will be sent to origin and the others will immediately form a new queue behind that one.

You worked around this with origin errors because the origin provided something that could be cached. While Fastly-generated errors can't be cached, with shielding enabled an origin that is down will result in the shield POP returning an error page to the edge POP. The edge POP will see that as if it is an origin error rather than a Fastly-generated error, and will therefore allow for queued requests to be handled in bulk.

This is rather complex, and in practice may affect only sites with extremely high traffic, but enabling it is as simple as turning on shielding, and making sure to handle the effect of receiving potentially stale content at the edge, as described above.

Replace error output with custom error pages

To recap, you have handled situations in which the origin is erroring, and situations in which the origin is down. In both cases, you check whether a stale version of the content is available, and if so, use it. That leaves the scenarios in which no stale version is available. In both of those, the VCL flow ends up in vcl_deliver, where you have a final opportunity to intervene before a response is dispatched to the end user. Whether the response we're about to send is an origin error or a Fastly-generated one, it will pass through vcl_deliver.

First, detect all such responses (status codes in the 5XX range), and check that you haven't already customized it. If it's an error you haven't customized yet, set a flag to say that it is going to be a custom error, and use restart to send the control flow back to vcl_recv. You can also use custom headers here to capture key details of the error.

```vcl
# Any 5XX response that hasn't already been replaced with a branded error
if ((resp.status >= 500 && resp.status < 600) && !req.http.x-branded-error) {
  # Flag the request and record details of the original error
  set req.http.x-branded-error = "true";
  set req.http.x-error-status = resp.status;
  set req.http.x-error-resp = resp.response;
  # Start the request over from vcl_recv
  restart;
}
```

Now, the request begins a new VCL flow, in which you have the opportunity to create a custom error response. Start by matching the x-branded-error header in vcl_recv and triggering a custom error flow using a non-standard HTTP status:

```vcl
if (req.http.x-branded-error) {
  error 600;
}
```

This special status code can then be matched in vcl_error (place this code before your existing vcl_error code):

```vcl
if (obj.status == 600 && req.http.x-branded-error) {
  set obj.status = 503;
  set obj.response = "Service unavailable";
  set obj.http.Content-Type = "text/html";
  set obj.http.X-Debug-Original-Error = req.http.x-error-status + " " + req.http.x-error-resp;
  synthetic {"
<!DOCTYPE html>
<html>
  <body>
    Sorry, we are currently experiencing problems fulfilling your request.
    We've logged this problem and we'll try to resolve it as quickly as possible.
  </body>
</html>
"};
  return(deliver);
}
```

This special response then passes into vcl_deliver. There it skips the code that performs the restart, because it still has the x-branded-error header, and is finally served to the end user. You have now served stale content whenever possible, and custom, branded content when stale content is not available.
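The X-Debug-Original-Error header set above goes to every end user who receives the branded page, so it reveals the original status and reason phrase. To keep that detail internal, one option is to remove the header in vcl_deliver unless the request asks for debug output. This is a minimal sketch. Using the Fastly-Debug request header as the switch is an assumption, and any request header you control would work just as well.

```vcl
# In vcl_deliver, alongside the restart logic.
# Assumed policy: only requests that send a Fastly-Debug header
# get to see the original error details.
if (!req.http.Fastly-Debug) {
  unset resp.http.X-Debug-Original-Error;
}
```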

Log the error (optional)

Requests that end up with a stale object being served will be flagged as such in the fastly_info.state variable and will run vcl_log as normal, providing an opportunity to log the incident. However, you may want to log explicitly when a synthetic error is served, in which case add a line such as this to the vcl_error logic you already have:

```vcl
# Replace log-name with the name of your logging endpoint
log "syslog " + req.service_id + " log-name :: We served a synthetic 503 for " + req.url;
```

Set some stale period defaults (optional)

The existence of stale content, and therefore the ability to discover it with stale.exists, depends on the content having a positive beresp.stale_if_error duration. An effective way to set this is by using the stale-if-error and stale-while-revalidate directives of the Cache-Control header, which you should set on the responses from your origin. However, if you're unable to do this at your origin, you could instead set them in VCL. Add this to the end of the vcl_fetch subroutine:

```vcl
# Only give stale periods to responses that are going to be cached
if (beresp.ttl > 0s) {
  set beresp.stale_while_revalidate = 60s;
  set beresp.stale_if_error = 86400s;
}
```

It's a good idea to set a short revalidation window and a longer error window. While you may not want to serve out-of-date content for very long, if the origin server is down, it's either that or an error message.
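The VCL above overwrites any stale-while-revalidate or stale-if-error values your origin already sent. If only some of your origins set these directives, a variant is to fill in defaults only where they are missing. This is a sketch that assumes both variables are zero when the origin's Cache-Control header lacks the matching directive. Note that it would also replace an explicit stale-if-error=0 from the origin.

```vcl
# At the end of vcl_fetch. Assumes a zero duration means the origin
# didn't send the corresponding Cache-Control directive.
if (beresp.ttl > 0s) {
  if (beresp.stale_while_revalidate == 0s) {
    set beresp.stale_while_revalidate = 60s;
  }
  if (beresp.stale_if_error == 0s) {
    set beresp.stale_if_error = 86400s;
  }
}
```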


Quick install


Once you have the code in your service, you can further customize it if you need to.
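For reference, here is how the snippets on this page fit together, grouped by the subroutine each one belongs in. Treat it as a sketch to merge into your existing subroutines (for example, as VCL snippets) rather than a complete custom VCL file. It omits the #FASTLY macros and any boilerplate your service already has. The optional logging line and stale defaults are included only as examples.

```vcl
# vcl_recv: a restarted request flagged for a branded error goes
# straight to vcl_error with the non-standard status 600.
sub vcl_recv {
  if (req.http.x-branded-error) {
    error 600;
  }
}

# vcl_fetch: the origin answered, but with a 5XX (origin is erroring).
sub vcl_fetch {
  if (beresp.status >= 500 && beresp.status < 600) {
    # Prefer a stale copy over the error when one exists
    if (stale.exists) {
      return(deliver_stale);
    }
    # Cache the error for 1s so collapsed requests queued behind it are served
    set beresp.cacheable = true;
    set beresp.ttl = 1s;
    return(deliver);
  }

  # Optional: default stale periods, if you can't set them at the origin
  if (beresp.ttl > 0s) {
    set beresp.stale_while_revalidate = 60s;
    set beresp.stale_if_error = 86400s;
  }
}

# vcl_error: place the branded-error block before your existing vcl_error code.
sub vcl_error {
  if (obj.status == 600 && req.http.x-branded-error) {
    set obj.status = 503;
    set obj.response = "Service unavailable";
    set obj.http.Content-Type = "text/html";
    set obj.http.X-Debug-Original-Error = req.http.x-error-status + " " + req.http.x-error-resp;
    # Optional: replace log-name with the name of your logging endpoint
    log "syslog " + req.service_id + " log-name :: We served a synthetic 503 for " + req.url;
    synthetic {"
<!DOCTYPE html>
<html>
  <body>
    Sorry, we are currently experiencing problems fulfilling your request.
    We've logged this problem and we'll try to resolve it as quickly as possible.
  </body>
</html>
"};
    return(deliver);
  }

  # Origin is down: vcl_fetch never ran, so check for a stale copy here
  if (obj.status >= 500 && obj.status < 600) {
    if (stale.exists) {
      return(deliver_stale);
    }
    return(deliver);
  }
}

# vcl_deliver: any 5XX not yet customized restarts into the branded flow
sub vcl_deliver {
  if ((resp.status >= 500 && resp.status < 600) && !req.http.x-branded-error) {
    set req.http.x-branded-error = "true";
    set req.http.x-error-status = resp.status;
    set req.http.x-error-resp = resp.response;
    restart;
  }
}
```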

All code on this page is provided under both the BSD and MIT open source licenses.