We recently announced Lucet, our native WebAssembly compiler and runtime, and have been so excited by the community’s interest. In the announcement, we mentioned that Lucet can instantiate WebAssembly modules in under 50 microseconds, showing that this new technology for faster, safer execution still handles the challenges of scaling to Fastly’s edge cloud platform. In this post, we’ll explain how the Lucet runtime system works by sharing what happens at each step of the lifecycle of a WebAssembly program running on Lucet. We’ll also detail how we keep the overhead of each step as low as possible.

Ahead-of-time compilation

In a web browser, the time between downloading the WebAssembly and running the program is part of the delay a user experiences when loading a page. Browser execution engines use just-in-time compilation to begin producing fast, native code quickly, sometimes even before the WebAssembly program has finished downloading. For server-side applications like Terrarium that run the same program for many requests, compilation speed is far less important than the set-up time for each request and the performance of the generated code.

Lucet includes an ahead-of-time compiler, lucetc, built on top of the Cranelift code generator created by Mozilla for use in Firefox’s WebAssembly and JavaScript JIT engines. WebAssembly programs are compiled by lucetc into native x86-64 shared object files, which are then ready to be loaded and run by the Lucet runtime. In a server like Terrarium, this step is only performed once and the cost is amortized over the lifetime of the server, which affords more time to spend on optimizations in the compiled program.

Memory regions

While some Lucet applications, like the lucet-wasi command-line interface, are designed to run one WebAssembly program at a time, we built Lucet to support massively concurrent execution at Fastly’s scale. Each Lucet instance needs a certain amount of memory for the WebAssembly heap and global variables, as well as the call stack and a 4KiB page for instance metadata.

Rather than allocating and freeing memory from the operating system every time we create and destroy an instance, we allocate a memory region with reusable slots for backing instances. Instances are created by taking a free slot from the region, which is then zeroed and returned to the region once the instance is destroyed. Following the pattern of ahead-of-time versus just-in-time compilation, we choose the approach that lets us amortize one expensive memory mapping operation over the life of a server performing cheaper slot reuse operations repeatedly.
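To make the slot-reuse idea concrete, here is a minimal sketch in Python. It is a toy model, not Lucet's implementation: the `Region` class, its method names, and the use of a `bytearray` in place of a real memory mapping are all assumptions made for illustration.

```python
class Region:
    """Toy model of a memory region with a fixed number of reusable slots."""

    def __init__(self, slot_count, slot_size):
        # One up-front allocation, standing in for the single expensive
        # memory mapping operation performed when the server starts.
        self._backing = bytearray(slot_count * slot_size)
        self._slot_size = slot_size
        self._free = list(range(slot_count))

    def acquire(self):
        """Take a free slot index; raises if the region is exhausted."""
        if not self._free:
            raise RuntimeError("no free slots in region")
        return self._free.pop()

    def view(self, slot):
        """Return a writable view of the memory backing one slot."""
        start = slot * self._slot_size
        return memoryview(self._backing)[start:start + self._slot_size]

    def release(self, slot):
        """Zero the slot so no state leaks, then put it back on the free list."""
        self.view(slot)[:] = bytes(self._slot_size)
        self._free.append(slot)
```

Creating and destroying an instance then costs only a list operation plus zeroing one slot, while the allocation of the backing memory happens once.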

Instantiation

To instantiate a Lucet program, we must:

dynamically load the lucetc-compiled shared object

take a free slot from a memory region

set up the instance heap with the correct permissions

copy the initial heap values from the shared object.

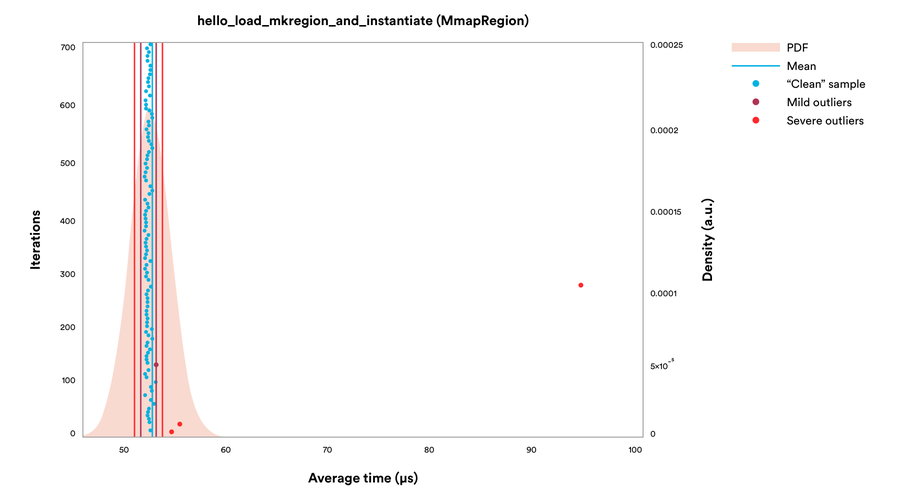

On our benchmarking system, a tool like lucet-wasi takes an average of 52µs to load and instantiate a WASI “Hello World” program, including the time taken to create a memory region with a single slot:

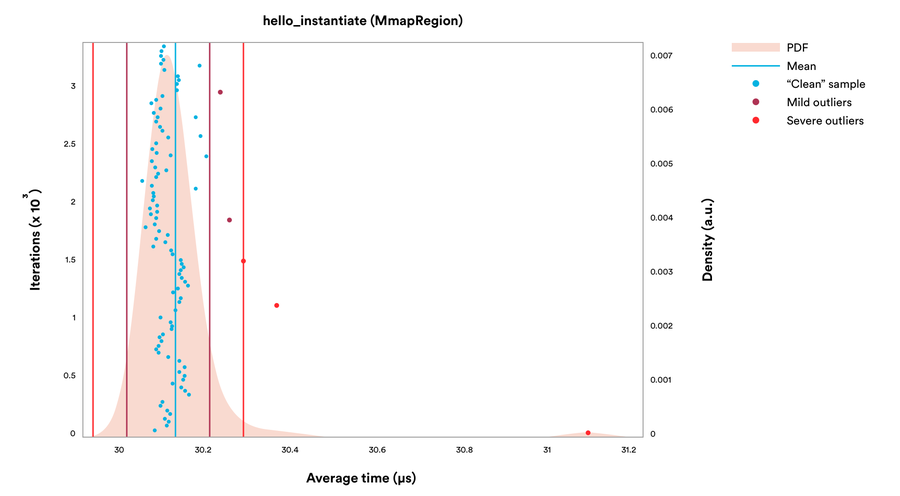

Of course, in a server environment like Terrarium, loading the shared object and creating the memory region are done once. On our benchmarking system, performing the steps of acquiring the memory slot and populating the heap takes an average of 30µs:

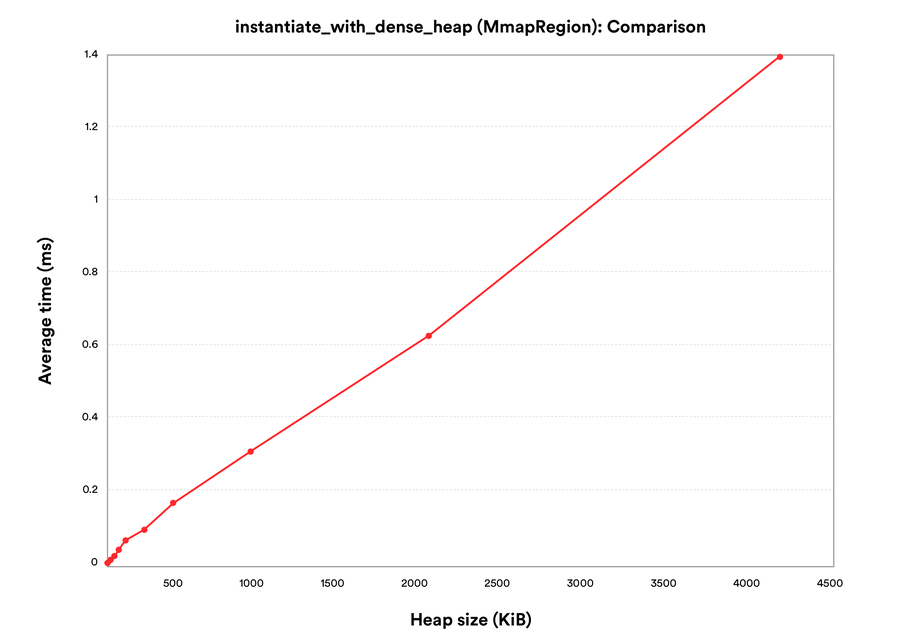

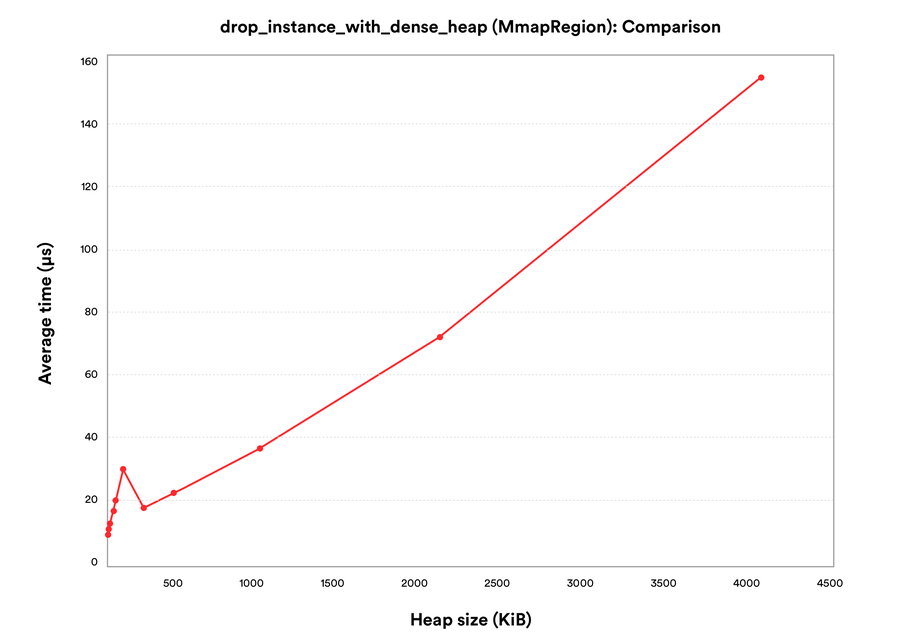

Our “Hello World” program doesn’t have much initial heap data, so the majority of the time is spent doing the bookkeeping with the memory slot and its permissions. If we try some synthetic programs with different initial heap sizes, we see that the time is dominated by copying the initial heap values into place, and that the time to instantiate grows linearly with the heap size:

Some compilers that target WebAssembly, such as the experimental Go backend, produce modules with very large initial heaps in order to support garbage collection. Fortunately, if most of an initial heap is zero, we can save some time on instantiation. In this run, we have synthetic programs with sparse initial heaps that have non-zero data only in one out of every eight pages:

In both cases, the instantiation time grows linearly with the size of the initial heap, but the sparse heaps only take one eighth the time of the dense heaps.
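One plausible way to get this saving is to record only the non-zero pages of the initial heap and copy just those into a freshly zeroed slot. The Python sketch below illustrates the idea under stated assumptions: the 4KiB `PAGE_SIZE` and the function names `sparse_segments` and `populate_heap` are hypothetical, and the source does not say that Lucet represents sparse heaps this way.

```python
PAGE_SIZE = 4096  # assumed page granularity for this sketch


def sparse_segments(initial_heap):
    """Split a heap image into (offset, page) pairs, dropping all-zero pages."""
    segments = []
    for offset in range(0, len(initial_heap), PAGE_SIZE):
        page = initial_heap[offset:offset + PAGE_SIZE]
        if any(page):  # keep the page only if some byte is non-zero
            segments.append((offset, page))
    return segments


def populate_heap(heap, segments):
    """Copy non-zero pages into an already-zeroed heap; return bytes copied."""
    copied = 0
    for offset, page in segments:
        heap[offset:offset + len(page)] = page
        copied += len(page)
    return copied
```

With data in one out of every eight pages, `populate_heap` copies one eighth of the bytes a dense copy would, which matches the ratio described above.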

Running an instance

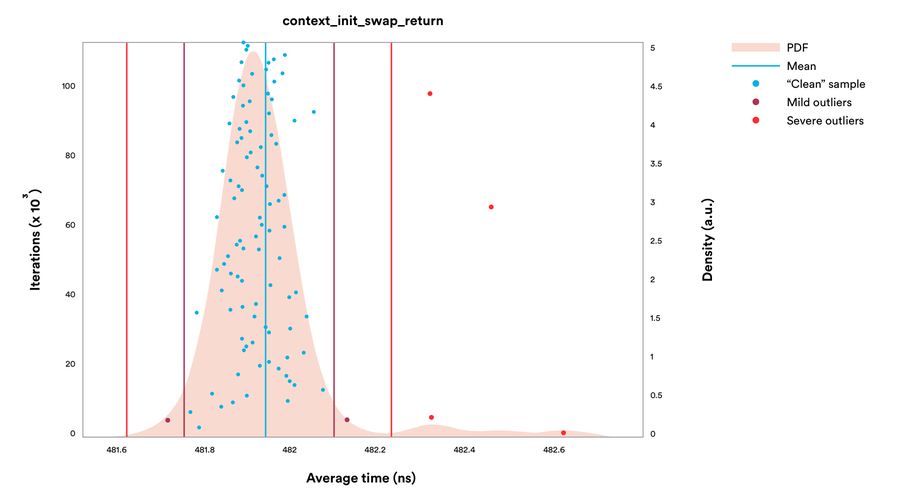

Once we have an instance, we can run the WebAssembly guest functions it exports. Rather than spawning a new Linux process or even a new thread, we instead perform a context switch on the host application’s thread, so that it begins running the guest function directly. In Lucet, this involves setting up the function arguments in the guest registers and call stack, saving the current thread’s signal mask, and then directly swapping out the host registers and stack for the guest’s. The context switch is extremely fast — half a microsecond on average to swap to, and then return from, a trivial function:

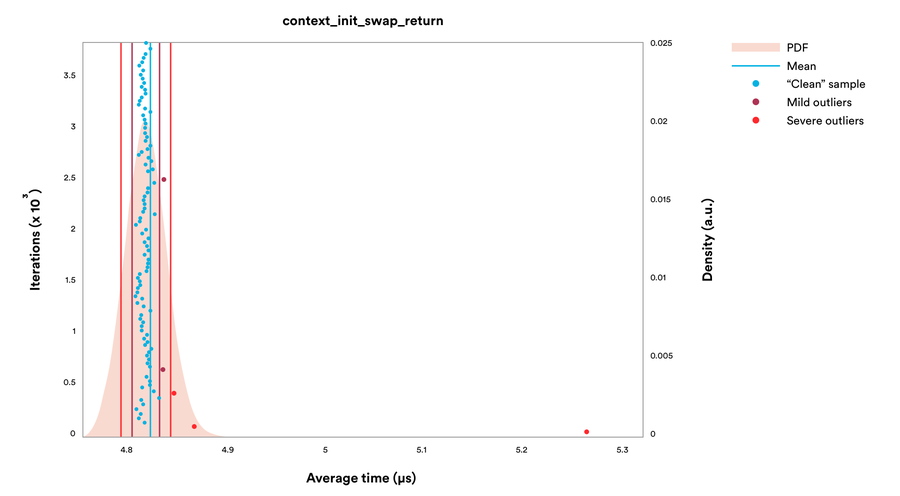

Extra system calls are required for the first Lucet instance that runs in a process in order to install the signal handler. Lucet uses a custom signal handler for exceptional conditions such as division by zero, so that errors are isolated to the instance that raises them. Continuing the theme with compilation and memory region creation, this is a one-time cost for most server applications, but even when this handler must be installed, instances run in an average of 4.9µs:

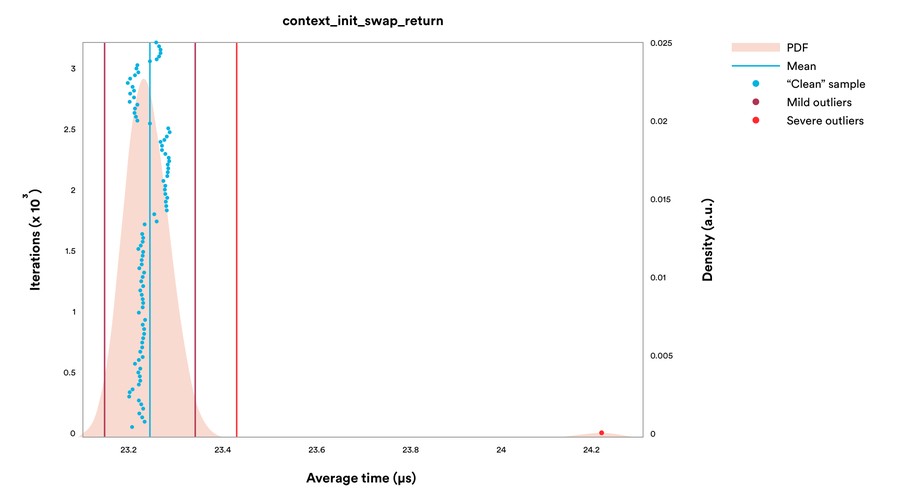

Tearing down

Once a server like Terrarium has completed a request, it must reset or destroy the instance that serviced it in order to prevent any state from leaking between requests. When destroying an instance, Lucet resets the memory protection and zeroes the memory in the instance’s slot, and then returns it to the free slot list of the memory region in an average of 23µs:

Because Linux can zero pages on demand using madvise(2), teardown takes around 35-40µs per MiB of heap used by a synthetic program:
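The effect of madvise(2) can be tried from Python's standard `mmap` module. This is a rough sketch rather than Lucet's code: the helper names `new_heap` and `reset_heap` are hypothetical, and the choice of `MADV_DONTNEED` on a private anonymous mapping is an assumption, since the post does not name the advice flag. On platforms other than Linux the sketch falls back to writing zeroes explicitly.

```python
import mmap
import sys


def new_heap(size):
    """Create a private anonymous mapping to stand in for an instance heap."""
    return mmap.mmap(
        -1,
        size,
        flags=mmap.MAP_PRIVATE | mmap.MAP_ANONYMOUS,
        prot=mmap.PROT_READ | mmap.PROT_WRITE,
    )


def reset_heap(heap):
    """Make the whole heap read as zero again."""
    if sys.platform.startswith("linux") and hasattr(mmap, "MADV_DONTNEED"):
        # On Linux, a private anonymous mapping reads back as zero-filled
        # pages after MADV_DONTNEED, and pages are repopulated on next touch.
        heap.madvise(mmap.MADV_DONTNEED)
    else:
        # Portable fallback: overwrite every byte with zero.
        heap[:] = bytes(len(heap))
```

The kernel discards the pages instead of the runtime writing zeroes to each one, so pages that are never touched again cost nothing further.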

Total runtime system overhead

Putting the steps together, we can get an idea of how much runtime system overhead is involved in executing WebAssembly programs with Lucet. The memory overhead is 4KiB for metadata, plus a configurable amount of memory for the call stack. The speed overhead varies. It depends on how much heap space the program uses and whether the workload is suitable for amortizing the costs of ahead-of-time compilation and creating the memory region. But we’ve seen in this post that to run a “Hello World” program, Lucet currently takes:

30µs for instantiation

5µs for context switching

23µs for destruction

The scale of Fastly’s edge cloud demands very high performance at every stage of handling requests. Lucet enables safer, more sophisticated logic at the edge while adding less than 60µs of setup and teardown overhead.
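As a quick check of the arithmetic behind the "less than 60µs" figure, the three "Hello World" numbers above can be summed directly. The dictionary and function names below are made up for this sketch.

```python
# Per-phase averages for "Hello World", in microseconds, as listed above.
HELLO_WORLD_OVERHEAD_US = {
    "instantiation": 30,
    "context switching": 5,
    "destruction": 23,
}


def total_overhead_us(phases):
    """Sum the per-phase overheads, in microseconds."""
    return sum(phases.values())


print(total_overhead_us(HELLO_WORLD_OVERHEAD_US))  # 58
```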

The rest of the performance story

This post explained the steps involved in running a WebAssembly program with Lucet and how much overhead is introduced by the Lucet runtime system. In a future post, we will take a closer look at the performance of the code generated by lucetc, including optimizations made possible by close co-development of a compiler and runtime system.

In the meantime, please check out the Lucet GitHub repository and let us know what you think!

Benchmarking notes

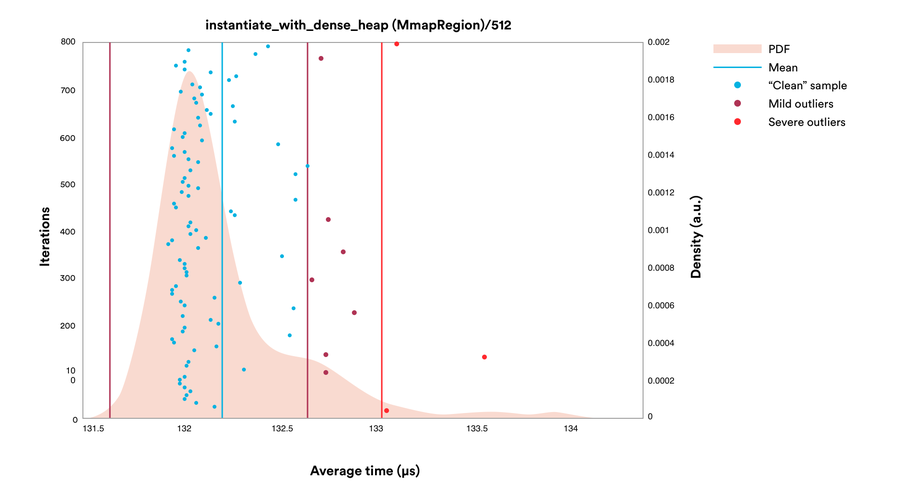

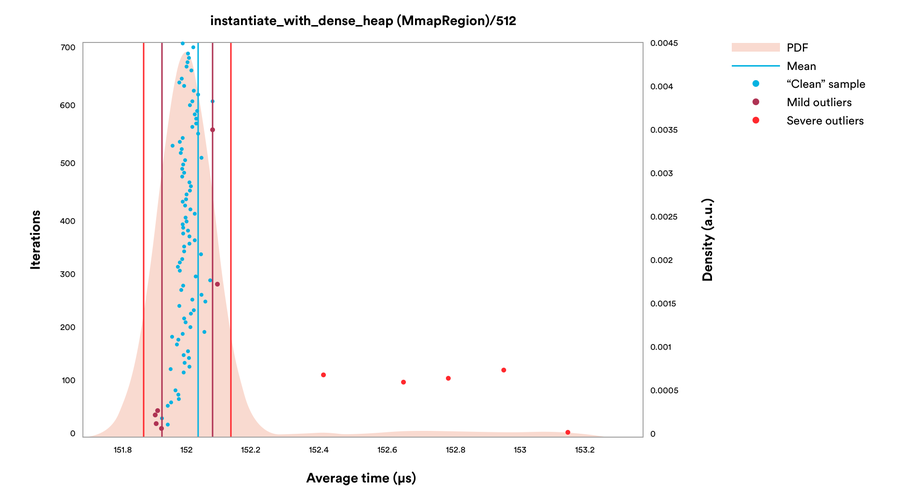

The performance figures listed above were gathered using the excellent Rust port of criterion to drive our benchmark suite, which you can find in the Lucet repository on GitHub. The benchmarking was done on a dedicated 64-bit Ubuntu 16.04 system with a dual-core 3.50GHz Intel Core i7-7567U processor, with Hyper-Threading and Turbo Boost disabled for consistency; while these features can improve performance in real-world settings, they make our benchmarks quite a bit noisier. For example, the probability density graph of our 512KiB heap instantiation looks like this with the features turned off:

But with both enabled, it looks like this (though note the different x-axis):