In “How to solve anything” parts 1 and 2, we outlined how to use Varnish Configuration Language (VCL) to address some of your more challenging problems. In this post, we’ll discuss how Andrew Betts of the Financial Times uses advanced VCL to securely cache and serve authenticated and authorized content, and set up feature flags.

Authentication

Traditionally, content that requires authentication and authorization has been difficult to serve out of cache, which greatly limits the value of a content delivery network for this type of content and hurts performance and scalability. With Fastly and VCL, once a user has been authenticated, the authorization and the content can be cached, providing extremely fast and scalable content delivery.

Implementing integration with your federated identity system entirely in VCL helps if:

You have a federated login system using a protocol like OAuth.

You want to annotate requests with a simple verified auth state.

Authentication is something “obviously everyone needs to do in their application,” Andrew noted, “but it’s kind of a tedious thing.” By using VCL to write request headers that include the username of the user making the request, FT can effectively authenticate a request without having to implement that logic in a back-end microservice. They put the authentication logic onto Fastly:

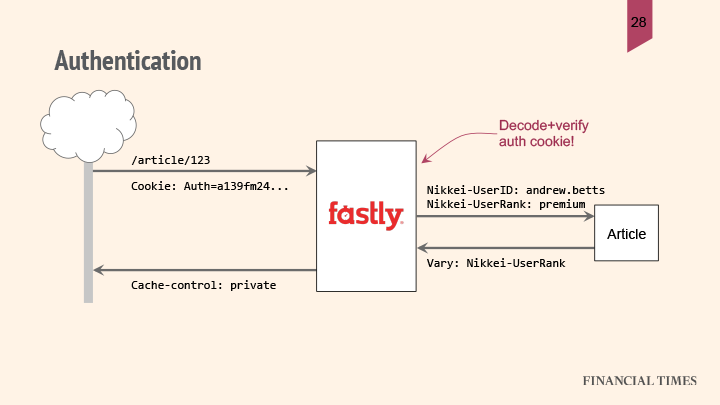

So if FT receives a cookie with their authentication token, they can decode and verify the signature of that token in the CDN, and then split out the various parts of the authentication data into separate request headers that they send to the back end.

FT splits the authentication data into separate headers because the parts have different levels of granularity. For example, the user ID is unique to each user; if the back end needs the user ID to generate the response, then the response has to be cached individually for each user. On the other hand, if the back end only needs a user’s role, rank, or group membership to generate the response, then the response is far more cacheable, because it can be reused for other users with the same role, rank, or group. FT uses the Vary header to tell Fastly which parts of the authentication data the back end actually used, and therefore which parts need to be taken into account when caching variations of that object.
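To make the effect of Vary concrete, here is a minimal Python sketch of a toy cache that keys each stored response on the URL plus the values of whichever request headers the response listed in Vary. The `VaryCache` class, the URLs, and the header values are hypothetical, and this is not a description of how Fastly is implemented; only the header names `Nikkei-UserID` and `Nikkei-Rank` come from the example in this post.

```python
from typing import Dict, List, Optional, Tuple


class VaryCache:
    """Toy cache: one entry per URL per combination of varied header values."""

    def __init__(self) -> None:
        # url -> request header names the stored response listed in Vary
        self._vary: Dict[str, List[str]] = {}
        # (url, values of the varied headers) -> cached body
        self._store: Dict[Tuple[str, Tuple[str, ...]], str] = {}

    def _key(self, url: str, req_headers: Dict[str, str]) -> Tuple[str, Tuple[str, ...]]:
        names = self._vary.get(url, [])
        return url, tuple(req_headers.get(name, "") for name in names)

    def get(self, url: str, req_headers: Dict[str, str]) -> Optional[str]:
        return self._store.get(self._key(url, req_headers))

    def put(self, url: str, req_headers: Dict[str, str], vary: List[str], body: str) -> None:
        self._vary[url] = vary
        self._store[self._key(url, req_headers)] = body


cache = VaryCache()
alice = {"Nikkei-UserID": "alice", "Nikkei-Rank": "premium"}
bob = {"Nikkei-UserID": "bob", "Nikkei-Rank": "premium"}

# The article only depends on rank, so one cached copy serves both users.
cache.put("/article/1", alice, ["Nikkei-Rank"], "premium article")
hit_for_bob = cache.get("/article/1", bob)

# The account page depends on the user ID, so Bob cannot reuse Alice's copy.
cache.put("/account", alice, ["Nikkei-UserID"], "alice's account")
miss_for_bob = cache.get("/account", bob)
```

Varying on the coarsest header the back end actually needs is what keeps the number of cached variants small.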

By doing authentication at the edge, FT can avoid asking the origin to verify the user's identity, protecting the origin and reducing latency by eliminating an extra trip before responding to the user.

Tools & techniques

Get a cookie by name:

    req.http.Cookie:MySiteAuth

Per Andrew, this is a “really nice syntax for digging into headers and picking out subfields,” which is in turn really useful for picking out individual cookies from the Cookie header.

Base64 decoding functions (if your particular flavor of auth cookie uses Base64 encoding):

    digest.base64url_decode(), digest.base64_decode()

Extract the parts of a JSON Web Token (JWT):

    regsub({{cookie}}, "(^[^\.]+)\.[^\.]+\.[^\.]+$", "\1");

regsub enables you to dig various bits out of arbitrarily formatted strings.

Set trusted headers for back end use:

    req.http.Nikkei-UserID = regsub({{jwt}}, {{pattern}}, "\1");

Check JWT signature (“crypto functions to check your signatures”):

    digest.hmac_sha256_base64()

Note that the last step, checking the signature, is of the utmost importance. Without this verification, people could forge their credentials quite easily.
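For readers who want to see the same steps outside VCL, the following is a minimal Python sketch using only the standard library. It assumes an HS256-signed JWT carrying the `sub` and `ds_rank` claims used in this post; the function names and the demo secret are hypothetical, and this is an illustration of the technique rather than FT's implementation.

```python
import base64
import hashlib
import hmac
import json
from typing import Optional


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def sign(header_b64: str, payload_b64: str, secret: bytes) -> bytes:
    """HMAC-SHA256 over 'header.payload', as HS256 JWTs require."""
    message = f"{header_b64}.{payload_b64}".encode("ascii")
    return hmac.new(secret, message, hashlib.sha256).digest()


def verify_jwt(token: str, secret: bytes) -> Optional[dict]:
    """Return the decoded claims if the signature is valid, else None."""
    parts = token.split(".")
    if len(parts) != 3:
        return None
    header_b64, payload_b64, sig_b64 = parts
    try:
        presented = b64url_decode(sig_b64)
        expected = sign(header_b64, payload_b64, secret)
        # Constant-time comparison avoids leaking how many bytes matched.
        if not hmac.compare_digest(presented, expected):
            return None
        return json.loads(b64url_decode(payload_b64))
    except ValueError:  # malformed base64, non-ASCII input, or invalid JSON
        return None


SECRET = b"demo-secret"  # placeholder; a real secret must never be hard-coded
header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
payload = b64url_encode(json.dumps({"sub": "user123", "ds_rank": "premium"}).encode())
signature = b64url_encode(sign(header, payload, SECRET))

claims = verify_jwt(f"{header}.{payload}.{signature}", SECRET)

# A forged payload reusing the old signature must be rejected.
forged_payload = b64url_encode(json.dumps({"sub": "admin", "ds_rank": "premium"}).encode())
forged = verify_jwt(f"{header}.{forged_payload}.{signature}", SECRET)
```

A production verifier would also check the `alg` value in the token header and any expiry claims, which this sketch leaves out.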

Example VCL:

    if (req.http.Cookie:NikkeiAuth) {
      # Split the JWT into its encoded header and payload segments.
      set req.http.tmpHeader = regsub(req.http.Cookie:NikkeiAuth,
        "(^[^\.]+)\.[^\.]+\.[^\.]+$", "\1");
      set req.http.tmpPayload = regsub(req.http.Cookie:NikkeiAuth,
        "^[^\.]+\.([^\.]+)\.[^\.]+$", "\1");

      # Decode the signature sent by the client and compute the expected one.
      set req.http.tmpRequestSig = digest.base64url_decode(
        regsub(req.http.Cookie:NikkeiAuth, "^[^\.]+\.[^\.]+\.([^\.]+)$", "\1")
      );
      set req.http.tmpCorrectSig = digest.base64_decode(
        digest.hmac_sha256_base64("{{jwt_secret}}", req.http.tmpHeader "." req.http.tmpPayload)
      );

      # Reject the request if the signatures differ; the custom 754 error
      # carries the login redirect and the cookie reset.
      if (req.http.tmpRequestSig != req.http.tmpCorrectSig) {
        error 754 "/login; NikkeiAuth=deleted; expires=Thu, 01 Jan 1970 00:00:00 GMT";
      }

      # Extract the claims the back end needs into trusted request headers.
      set req.http.tmpPayload = digest.base64_decode(req.http.tmpPayload);
      set req.http.Nikkei-UserID = regsub(req.http.tmpPayload, {"^.*?"sub"\s*:\s*"(\w+)".*?$"}, "\1");
      set req.http.Nikkei-Rank = regsub(req.http.tmpPayload, {"^.*?"ds_rank"\s*:\s*"(\w+)".*?$"}, "\1");

      # Remove the temporary headers so they are not forwarded to the back end.
      unset req.http.tmpHeader;
      unset req.http.tmpPayload;
      unset req.http.tmpRequestSig;
      unset req.http.tmpCorrectSig;
    } else {
      set req.http.Nikkei-UserID = "anonymous";
      set req.http.Nikkei-Rank = "anonymous";
    }

Feature flags

Setting up feature flags lets you do dark deployments and easy A/B testing without impacting front end performance or cache efficiency, helping you:

Serve different versions of your site to different users.

Test new features internally on prod before releasing to the world.

As with the authenticated content described above, this has traditionally been a challenge with cached content.

Enabling feature flags lets FT separate code deployments, which they do many times a day, from feature releases, which they do when they’re “good and ready.” They deploy code all the time, but this allows for features to be in production before they turn them on for all users.

Using a tool called the feature toggler, Andrew sets flag values into an override cookie, which changes his own experience on the production site without affecting anyone else.

Feature flags are also a good way of running A/B tests: FT can start by releasing a feature that no one will see, and turn it on in production on a case-by-case basis to let their team try it out. When they’re happy that the feature is working, they can “dial it up to 1% or 10% of the audience, then dial it up to 100% and leave it there.” Eventually they’ll remove the feature flag altogether, because the feature is critical and there’s no need to turn it off.

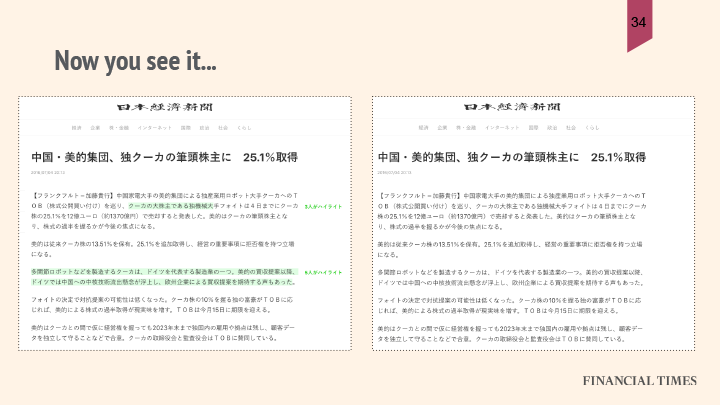

Below is an example of a page with and without a highlights feature (like you’d see on Medium):

Here’s what you need to implement feature flags:

A flags registry — “A JSON file will be fine.” Include all possible values of each flag and percentage of the audience it applies to, and publish it statically — “S3 is good for that.”

A flag toggler tool to read the JSON, render a table, and write an override cookie with chosen values.

An API to read the JSON, respond to requests by calculating a user’s position number on a 0-100 line, and match them with appropriate flag values. (That is, assign appropriate values to users based on user ID.)

VCL to sew it all together.

Most feature flags will be one value assigned to all users, but a few users might be participating in A/B tests, in which case FT will assign different values to different users depending on user ID.
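The allocation step can be sketched in a few lines of Python. The registry layout below (a `default` value plus `variants` with a `percent` each), the flag names, and the hashing scheme are all assumptions made for illustration; the post only specifies that the registry is a JSON file and that each user is placed on a 0-100 line based on user ID.

```python
import hashlib
import json
from typing import Dict

# Hypothetical registry format; the real FT registry may look different.
REGISTRY_JSON = """
{
  "highlights":  {"default": "off", "variants": [{"value": "on", "percent": 10}]},
  "newNav":      {"default": "off", "variants": [{"value": "on", "percent": 100}]},
  "darkFeature": {"default": "off", "variants": [{"value": "on", "percent": 0}]}
}
"""


def position(user_id: str) -> float:
    """Map a user ID deterministically to a point in [0, 100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return (int.from_bytes(digest[:8], "big") % 10000) / 100


def flags_for(user_id: str, registry: Dict[str, dict]) -> Dict[str, str]:
    """Pick a value for every flag based on where the user sits on the line."""
    pos = position(user_id)
    result: Dict[str, str] = {}
    for name, spec in registry.items():
        value = spec["default"]
        upper = 0.0
        # Variants occupy consecutive slices of the line, starting at 0.
        for variant in spec["variants"]:
            upper += variant["percent"]
            if pos < upper:
                value = variant["value"]
                break
        result[name] = value
    return result


registry = json.loads(REGISTRY_JSON)
flags = flags_for("user123", registry)
```

Because the position is derived from a hash of the user ID, the same user always lands in the same bucket, and raising a variant's percentage only ever adds users to it.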

Here’s how the flow works:

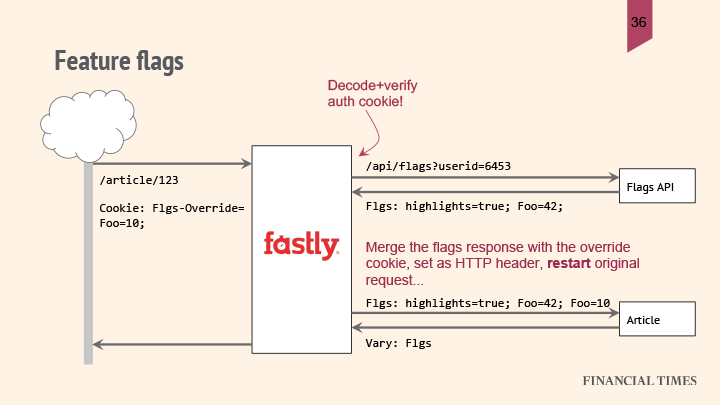

The flow starts with a request for an article. Using the authentication pattern from earlier, FT determines the user ID and uses it to make a preflight request to their flags API. The flags API works out which A/B buckets the user falls into and allocates a value for each feature flag.

The flags API sends back a list of the flags enabled for that particular user, which is transferred from the preflight response to a request header on the original request. Any overrides received in a cookie are then appended to that header. The request then goes through the regular flow: lookup in the cache, and if it’s not there, it’s fetched from the back end.

The overrides from the cookie might mean that the same feature flag is defined more than once in the header. The origin handles this by paying attention only to the last value for each flag in the string.
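The last-value-wins rule is simple to implement at the origin. Here is a minimal Python sketch; the header format (comma-separated `name:value` pairs) and the flag names are assumptions, since the post does not show the actual format FT uses.

```python
from typing import Dict


def parse_flags(header: str) -> Dict[str, str]:
    """Parse 'name:value' pairs; a later pair overrides an earlier one."""
    flags: Dict[str, str] = {}
    for item in header.split(","):
        name, sep, value = item.strip().partition(":")
        if sep and name:  # skip empty or malformed items
            flags[name] = value
    return flags


api_flags = "highlights:off,newNav:on"  # values allocated by the flags API
cookie_overrides = "highlights:on"      # values from the override cookie

# Overrides are appended after the API values, so they take precedence.
resolved = parse_flags(f"{api_flags},{cookie_overrides}")
```

Appending rather than merging keeps the edge logic trivial: the edge only concatenates strings, and the origin resolves duplicates.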

And when the origin (in the above example, the article service) uses any of those flags, it returns a Vary header to tell Fastly to cache different versions of the response that have different flag headers separately.

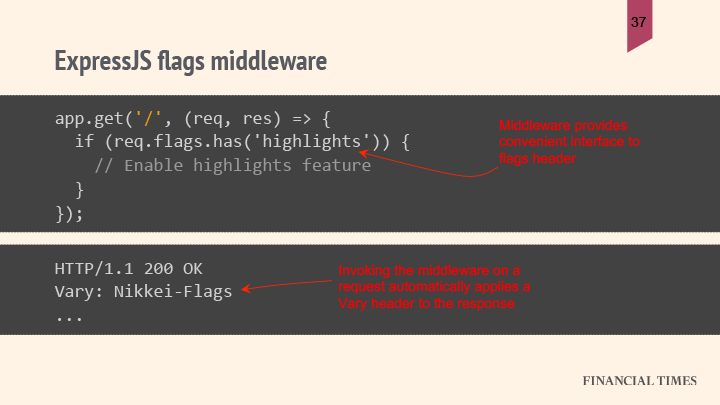

Using Express.js middleware, FT can check which flags are enabled for a given request.

The middleware knows when they’ve accessed the flag data, and therefore knows it has to set the Vary header on the response. (This way they don’t have to remember to set the Vary header; if they forgot, “that would be pretty bad.”) At the same time, if they don’t use any of the flag information when generating the response, they don’t need to set a Vary header, giving them better cache performance.
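The access-tracking idea is independent of Express. The following Python sketch shows one way it could work: a wrapper records whether any flag was read, and a finalizing step adds Vary only in that case. The `TrackedFlags` class, the handlers, and the `X-Flags` header name are hypothetical and are not taken from FT's middleware.

```python
from typing import Dict, Tuple

FLAGS_HEADER = "X-Flags"  # hypothetical name for the flags request header


class TrackedFlags:
    """Wraps the flag values and remembers whether a handler read any of them."""

    def __init__(self, flags: Dict[str, str]) -> None:
        self._flags = flags
        self.accessed = False

    def get(self, name: str, default: str = "off") -> str:
        self.accessed = True
        return self._flags.get(name, default)


def finalize(headers: Dict[str, str], flags: TrackedFlags) -> Dict[str, str]:
    """Add the flags header to Vary only if the handler read a flag."""
    if flags.accessed:
        existing = headers.get("Vary")
        headers["Vary"] = f"{existing}, {FLAGS_HEADER}" if existing else FLAGS_HEADER
    return headers


def article_handler(flags: TrackedFlags) -> Tuple[str, Dict[str, str]]:
    # Reading a flag here is what later triggers the Vary header.
    body = "article with highlights" if flags.get("highlights") == "on" else "article"
    return body, {"Content-Type": "text/html"}


def about_handler(flags: TrackedFlags) -> Tuple[str, Dict[str, str]]:
    # This handler ignores flags, so its response stays shared across users.
    return "about us", {"Content-Type": "text/html"}


flags_a = TrackedFlags({"highlights": "on"})
body_a, headers_a = article_handler(flags_a)
headers_a = finalize(headers_a, flags_a)

flags_b = TrackedFlags({"highlights": "on"})
body_b, headers_b = about_handler(flags_b)
headers_b = finalize(headers_b, flags_b)
```

Putting the Vary decision in one place means individual handlers cannot forget it, which is the property the paragraph above describes.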

Watch the full video of Andrew’s presentation below.