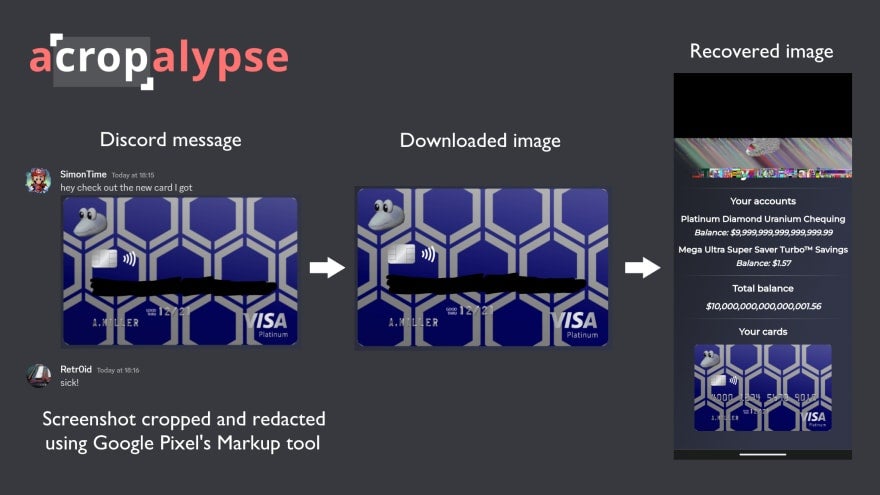

What's the a"crop"alypse? Last week, Simon Aarons and David Buchanan posted their discovery that images cropped using Android's Markup editor app often hid within them large portions of the original uncropped image. This is a privacy problem because users don't understand that the portion of the image they cropped out is potentially still there. This could include personal details, account names, content within notifications, and myriad other bits of sensitive information that might happen to be on some part of a phone screen that a user crops out of a screenshot. David went on to suggest that CDNs could transparently mitigate the vulnerability by deploying a filter at the edge, taking the responsibility off the user and making the internet a better place.

Challenge accepted!

Even though the root of the problem has already been addressed by a fix in the Markup editor app, there is a lot of damage that cannot be undone - images cropped before the fix are still live across the internet’s platforms and sites, and the fix to the Markup app cannot retroactively remove the “cropped” content from images that have already been shared off the device. An improperly “cropped” image that was uploaded to a social platform before the fix is still out there, and still poses a privacy risk for the author.

Fastly's Compute is a good place to solve problems like this quickly. A fix could strip the “cropped” data from any images that pass through apps and services that are delivered through the Fastly edge.

The easy answer

We already have an Image optimization feature, and we've verified that any image that passes through our optimizer will be stripped of trailing content. That means if you use IO on your Fastly service, you're already filtering images such that the acropalypse data will be removed.

However, not everyone uses image optimization with their Fastly service. So what if you don't?

Understanding the problem

PNG files have a well-known byte sequence that identifies them, the "magic bytes", which are these:

    89 50 4E 47

You can see this in a hexdump of any PNG file:

    ○ head -c 100 Screenshot\ 2023-03-01\ at\ 10.35.01.png | hexdump -C
    00000000 89 50 4e 47 0d 0a 1a 0a 00 00 00 0d 49 48 44 52 |.PNG........IHDR|
    00000010 00 00 06 cc 00 00 04 e6 08 06 00 00 00 85 ef 84 |................|
    00000020 f2 00 00 0a aa 69 43 43 50 49 43 43 20 50 72 6f |.....iCCPICC Pro|
    00000030 66 69 6c 65 00 00 48 89 95 97 07 50 53 e9 16 c7 |file..H....PS...|
    00000040 bf 7b d3 43 42 49 42 28 52 42 6f 82 74 02 48 09 |.{.CBIB(RBo.t.H.|
    00000050 a1 85 de 9b a8 84 24 40 28 21 26 04 05 bb b2 b8 |......$@(!&.....|
    00000060 82 6b 41 44 |.kAD|
    00000064

PNG files end with a similar marker, called IEND, which is also easy to spot in the byte stream: a sequence of four null bytes (the chunk's zero length field) and the ASCII characters IEND, followed by a four-byte checksum:

    ○ tail -c 100 Screenshot\ 2023-03-01\ at\ 10.35.01.png | hexdump -C
    00000000 98 cf ff 9d 2f 79 c0 7b c3 ec de 7b ef 8d 1b 96 |..../y.{...{....|
    00000010 2c 0b 1e f0 24 c3 a2 32 f9 4a fd 95 c6 3a 7c 13 |,...$..2.J...:|.|
    00000020 a1 1a 78 6c 47 70 c6 fb dc 41 dd f2 8d 4b 79 d1 |..xlGp...A...Ky.|
    00000030 35 f2 6b 89 ef f0 d8 3e e8 ed 23 70 c6 3f 5e 6f |5.k....>..#p.?^o|
    00000040 6f 98 75 80 4b 7c da 01 1e 1c 72 80 93 a8 ff 37 |o.u.K|....r....7|
    00000050 c8 1a 61 82 9a 74 43 f6 00 00 00 00 49 45 4e 44 |..a..tC.....IEND|
    00000060 ae 42 60 82 |.B`.|

The problem is that some tools, notably the Markup tool on Android, when cropping an image, leave the original image data in the leftover space at the end of the file, after the IEND marker.
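To make the layout concrete, here is a minimal sketch that measures how many bytes follow the IEND chunk of a PNG held in memory. It relies on the PNG chunk layout (a 4-byte big-endian length, a 4-byte type, the data, then a 4-byte CRC) and does not verify the CRCs. The name `pngTrailingBytes` is a hypothetical helper for illustration, not something from the original post or any Fastly API.

```javascript
// Sketch: count bytes that follow the IEND chunk of a PNG held in memory.
// `pngTrailingBytes` is a hypothetical helper, not part of any Fastly API.
const PNG_SIGNATURE = [0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a];

function pngTrailingBytes(bytes) {
  // Not a PNG: report -1
  if (bytes.length < 8 || !PNG_SIGNATURE.every((b, i) => bytes[i] === b)) {
    return -1;
  }
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  let offset = 8;
  // Each chunk is: 4-byte big-endian length, 4-byte type, data, 4-byte CRC
  while (offset + 12 <= bytes.length) {
    const length = view.getUint32(offset);
    const type = String.fromCharCode(...bytes.subarray(offset + 4, offset + 8));
    offset += 12 + length;
    if (type === "IEND") return Math.max(0, bytes.length - offset);
  }
  return -1; // No IEND chunk found (truncated or malformed file)
}
```

A result greater than zero means there is data after the end of the image, which is where a badly cropped screenshot would keep its leftovers.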

Simon's post helpfully illustrates the issue:

OK, so how can we stop this from leaking our users' private data?

Compute to the rescue

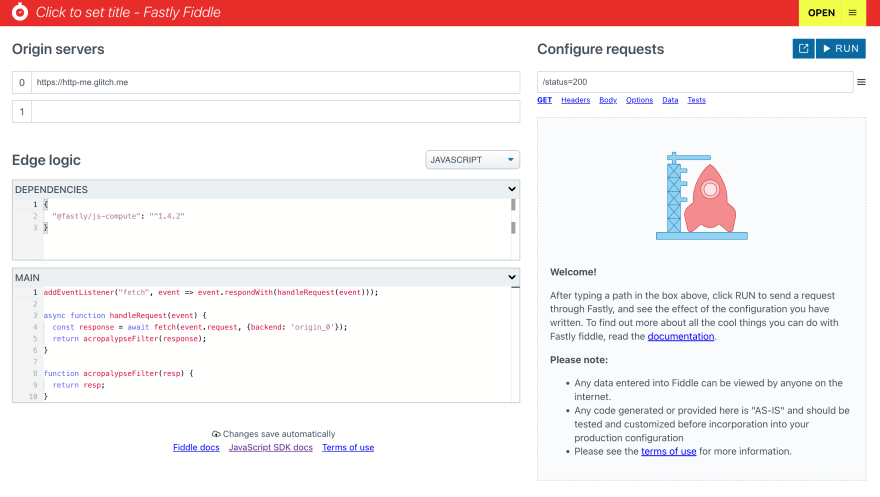

Let's crack open Fastly Fiddle, and write some code at the edge. To start, I'll make a basic request handler, which sends the request to a backend, and passes the response back to the client.

That looks like this:

    addEventListener("fetch", event => event.respondWith(handleRequest(event)));

    async function handleRequest(event) {
      const response = await fetch(event.request, {backend: 'origin_0'});
      return acropalypseFilter(response);
    }

    function acropalypseFilter(resp) {
      return resp;
    }

And in Fiddle you'll see this:

I can configure my fiddle to use http-me.glitch.me as a backend, a server Fastly maintains to provide responses that can be handy for testing this kind of thing. The path can be set to /image-png to get HTTP-me to give me a PNG image.

Now I need to flesh out my acropalypseFilter function. Here is what I came up with:

    function acropalypseFilter(response) {
      // Define the byte sequences for the PNG magic bytes and the IEND marker
      // that identifies the end of the image
      const pngMarker = new Uint8Array([0x89,0x50,0x4e,0x47]);
      const pngIEND = new Uint8Array([0x00,0x00,0x00,0x00,0x49,0x45,0x4e,0x44]);

      // Define an async function so we can use await when processing the stream
      async function processChunks(reader, writer) {
        let isPNG = false;
        let isIEND = false;
        while (true) {
          // Fetch a chunk from the input stream
          const { done, value: chunk } = await reader.read();
          if (done) break;

          // If we have not yet found a PNG marker, see if there's one
          // in this chunk. If there is, we have a PNG that is potentially
          // vulnerable
          if (!isPNG && seqFind(chunk, pngMarker) !== -1) {
            console.log("It's a PNG");
            isPNG = true;
          }

          // If we know we're past the end of the PNG, any remaining data
          // in the file is the hidden data we want to remove. Since we already
          // sent the Content-Length header, we'll pad the rest of the response
          // with zeroes.
          if (isIEND) {
            writer.write(new Uint8Array(chunk.length));
            continue;
          // If it's a PNG but we're yet to get to the end of it...
          } else if (isPNG) {
            // See if this chunk contains the IEND marker
            // If so, output the data up to the end of the IEND chunk and
            // replace the rest of the chunk with zeroes.
            const idx = seqFind(chunk, pngIEND);
            if (idx !== -1) {
              console.log(`Found IEND at ${idx}`);
              isIEND = true;
              // Keep the IEND chunk itself: the 8-byte marker plus its 4-byte
              // CRC, otherwise the image would lose its end marker
              const end = Math.min(idx + pngIEND.length + 4, chunk.length);
              writer.write(chunk.slice(0, end));
              writer.write(new Uint8Array(chunk.length - end));
              continue;
            }
          }

          // Either we're not dealing with a PNG, or we're in a PNG but have not
          // reached the IEND marker yet. Either way, we can simply copy the
          // chunk directly to the output.
          writer.write(chunk);
        }

        // After the input stream ends, we should do cleanup.
        writer.close();
        reader.releaseLock();
      }

      if (response.body) {
        const {readable, writable} = new TransformStream();
        const writer = writable.getWriter();
        const reader = response.body.getReader();
        processChunks(reader, writer);
        return new Response(readable, response);
      }
      return response;
    }

Let's step through this:

We set up some variables to hold the byte sequences that will tell us whether the response is a PNG and also whether we've reached the end of it.

Skipping to the bottom, the if (response.body) block creates a TransformStream, and returns a new Response that consumes the readable side of it. Now we need to read from the backend response, and push data into the writable side of the TransformStream.

Since we want to return a Response from the acropalypseFilter function, the acropalypseFilter function cannot be async (if it was declared async it would return a Promise, not a Response). So instead, I've defined a child function, processChunks, which can be async. We can also call it without awaiting the Promise, because it's OK to return the Response before the stream has ended (better, actually!).

In processChunks, we create a read loop and read chunks of data from the backend response. We are looking for the magic marker that says the file is a PNG. If we find it, we're then looking for the marker that indicates the end of the PNG. If we find that, then everything after that point is replaced by zeros. This is the key bit that defeats the data leak.

But, why not just cut off the response at that point? The problem is, we've already sent the headers of the response, and most likely, the response headers included a Content-Length, so we need to emit that much data.
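If streaming is not a requirement, one alternative would be to buffer the whole body, cut it after the IEND chunk, and send a corrected Content-Length. The following is only a sketch under that assumption. It uses the standard Fetch API classes (Response, Headers), and `truncateAtIend` and `bufferedFilter` are hypothetical names that do not appear in the fiddle.

```javascript
// Sketch of a buffered alternative; `truncateAtIend` and `bufferedFilter`
// are hypothetical names used for illustration only.
const IEND_MARKER = [0x00, 0x00, 0x00, 0x00, 0x49, 0x45, 0x4e, 0x44];

// Return the bytes up to and including the IEND chunk (marker + 4-byte CRC).
// Input that is not a PNG, or has no IEND marker, is returned unchanged.
function truncateAtIend(bytes) {
  const isPng = bytes[0] === 0x89 && bytes[1] === 0x50 &&
                bytes[2] === 0x4e && bytes[3] === 0x47;
  if (!isPng) return bytes;
  for (let i = 0; i + IEND_MARKER.length <= bytes.length; i++) {
    if (IEND_MARKER.every((b, j) => bytes[i + j] === b)) {
      const end = Math.min(i + IEND_MARKER.length + 4, bytes.length);
      return bytes.subarray(0, end);
    }
  }
  return bytes;
}

async function bufferedFilter(response) {
  // Reads the whole body into memory, so the benefits of streaming are lost
  const bytes = truncateAtIend(new Uint8Array(await response.arrayBuffer()));
  const headers = new Headers(response.headers);
  headers.set("content-length", String(bytes.length));
  return new Response(bytes, { status: response.status, headers });
}
```

The trade-off is memory and latency: nothing can be sent to the client until the whole image has arrived, which is why the streaming version pads with zeros instead.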

One other thing. The chunk that comes out of the reader.read() method is a Uint8Array, and we need to search it to find the special sequences of bytes we're looking for. There's no built-in way in JavaScript to search a typed array for a sequence of bytes, so I wrote a function to search for a sequence of elements in an array:

    // Find a sequence of elements in an array
    function seqFind(input, target, fromIndex = 0) {
      const index = input.indexOf(target[0], fromIndex);
      if (target.length === 1 || index === -1) return index;
      let i, j;
      for (i = index, j = 0; j < target.length && i < input.length; i++, j++) {
        if (input[i] !== target[j]) {
          return seqFind(input, target, index + 1);
        }
      }
      return (i === index + target.length) ? index : -1;
    }

There are definitely ways to improve this, but for a quick exercise in what can be done with edge computing, it's really cool.
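One possible improvement, sketched here as an assumption rather than something the fiddle does: a marker can straddle two stream chunks, in which case searching each chunk on its own would miss it. Keeping the last few bytes of the previous chunk closes that gap. `createMarkerScanner` is a hypothetical helper name.

```javascript
// Sketch: find a byte marker even when it straddles two stream chunks.
// `createMarkerScanner` is a hypothetical helper, not from the original fiddle.
function createMarkerScanner(marker) {
  let tail = new Uint8Array(0); // up to (marker.length - 1) bytes carried over
  let consumed = 0;             // total bytes seen in earlier chunks

  // Feed one chunk; returns the absolute stream offset of the marker, or -1
  return function scan(chunk) {
    const joined = new Uint8Array(tail.length + chunk.length);
    joined.set(tail, 0);
    joined.set(chunk, tail.length);
    const base = consumed - tail.length; // stream offset of joined[0]
    for (let i = 0; i + marker.length <= joined.length; i++) {
      if (marker.every((b, j) => joined[i + j] === b)) return base + i;
    }
    consumed += chunk.length;
    tail = joined.slice(Math.max(0, joined.length - (marker.length - 1)));
    return -1;
  };
}
```

With the IEND marker, the image would end at the returned offset plus 12 (the 8-byte marker and its 4-byte CRC), and everything from that stream position onward is what needs to be zeroed.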

Check out my fiddle here and if you like, clone it and make your own version.